AI Penetration Testing

Argus AI Security

We anticipate the future

At Argus AI Red, you'll find a partner dedicated to helping you leverage AI technology to its fullest potential, driving growth and innovation in your organization.

Information security

Adversarial AI Security

Artificial Intelligence (AI) has the potential to revolutionize many industries and change the way we live and work. However, as AI becomes more advanced, so too do the challenges it faces. One of the most significant of these is Adversarial Machine Learning (AML).

An adversarial attack feeds an AI system input data that is designed to trick it into making an incorrect decision. This is often done by adding small, carefully crafted perturbations to the input that humans do not notice but that cause the AI system to misbehave.
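To make the idea of a crafted perturbation concrete, here is a minimal sketch of one well-known technique, the Fast Gradient Sign Method (FGSM), applied to a toy logistic-regression classifier. The weights, bias, input, and `epsilon` are hypothetical values chosen for illustration only; they do not describe any Argus AI tooling or a real production model.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Shift every feature by exactly epsilon in the direction that increases the loss."""
    p = predict(weights, bias, x)
    # For logistic regression with cross-entropy loss, the gradient of the
    # loss with respect to the input is (p - y) * w.
    grad = [(p - true_label) * w for w in weights]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical toy model and input (assumptions for illustration).
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.3, 0.1, 0.2]

x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.1)

print("original score:", predict(weights, bias, x))       # above 0.5, class 1
print("adversarial score:", predict(weights, bias, x_adv))  # below 0.5, class 0
```

In this toy case a shift of 0.1 per feature is enough to flip the predicted class. With high-dimensional inputs such as images, far smaller per-feature changes can add up to the same effect, which is why such perturbations can go unnoticed by humans.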

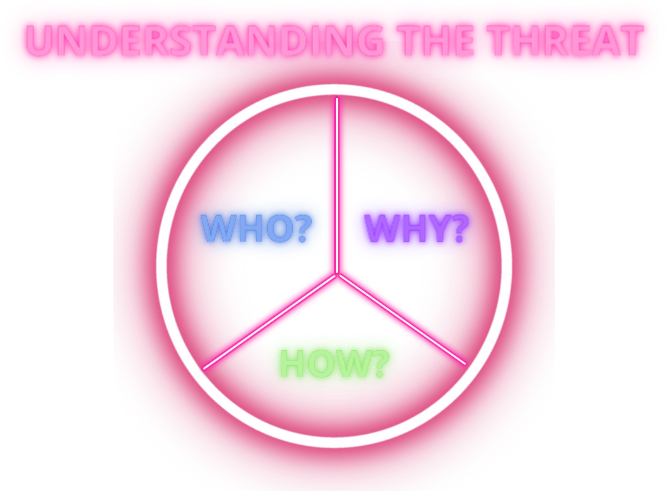

Who? Why? How?

Understanding the threat

To defend against AML, you need to understand the who, why, and how of the threat so that you can take the right steps to keep your organization safe. Developing AI systems that are robust against adversarial attacks is paramount to long-term business success. This can be achieved through a range of measures fine-tuned to the threat scenario specific to your organization.

AML is a growing concern in the AI community because attackers can manipulate AI systems into making incorrect decisions. This can have serious consequences, such as misclassifying a dangerous object as benign or raising a false fraud alert.

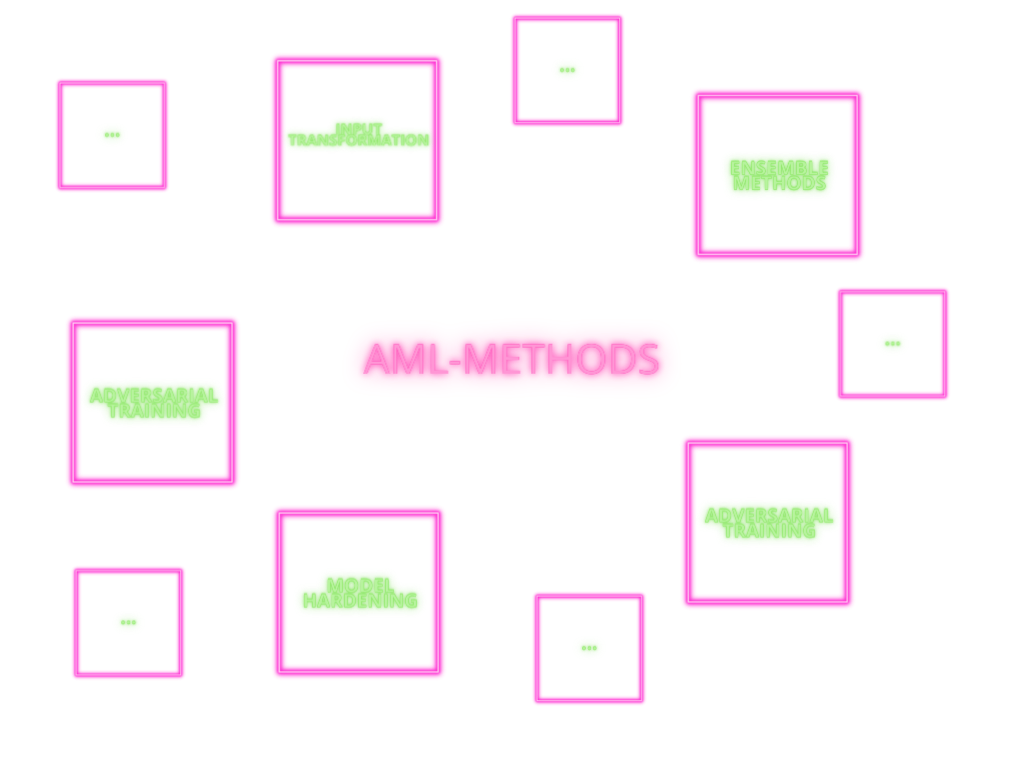

AML-Methods

Understanding the model

At Argus AI, we spend much of our time focusing on the “How” of the threat: how can algorithms be broken, tricked, and manipulated, and what can these insights contribute to the further development of machine learning models? Understanding the model and its underlying architecture creates the ability to anticipate attack strategies and employ them proactively to improve the robustness of the organization.

Combining Resilient AI Power with Organizational Excellence

AI Security Organization

AI security is a dynamic challenge, requiring continuous vigilance against threats like adversarial attacks, data poisoning, and model exploitation. To address these effectively, organizations must move beyond tools and models to establish an AI Threat Response Team seamlessly integrated into their operations.

This team is vital for proactive and reactive security. They monitor AI systems in real time, identify anomalies, and swiftly respond to emerging threats. Beyond immediate defense, they analyze vulnerabilities, counter adversarial tactics, and evolve security protocols to stay ahead of potential attackers. Without an embedded team, organizations risk fragmented workflows, delayed responses, and compromised AI assets.

Argus AI is your trusted partner in building this critical capability. We work with you to design processes and teams tailored to your organization’s needs. Our expertise covers everything from identifying necessary skills and integrating advanced monitoring tools to creating workflows that ensure seamless collaboration between IT, data science, and operations.

With Argus AI, your organization can enhance resilience in an ever-changing threat landscape. By embedding AI security into your core operations, you protect your AI investments and build trust with customers and stakeholders. Secure your AI systems today with Argus AI’s guidance and expertise.

Protect Your Systems from LLM Manipulation and Prompt Injection

LLM Threats

Future-proof your AI systems today with Argus AI, because staying ahead of threats is the best defense.